P-value and Significance in Particle Physics

Combination Formula: n choose k

Suppose \(k\) of \(n\) coins show heads, and the heads are indistinguishable from one another. We want to count the combinations, i.e. the number of ways to place \(k\) heads among \(n\) coins.

There are \(n\) choices for where to put the first head. Once it is placed, \(n-1\) coins remain, so there are \(n-1\) choices for the second head. Continuing in this way, there are $$n(n-1)\cdots(n-k+1)$$ ways to put \(k\) heads in \(n\) coins.

If the heads were distinguishable (a labelled first head, second head, and so on), we would end here. However, they are not.

Because the heads are indistinguishable, putting the first head in one position and the second head in another gives the same combination as putting the second head in the former position and the first head in the latter. The \(n(n-1)\cdots(n-k+1)\) ways therefore count each combination more than once, and we need to eliminate the duplicates.

Consider any one combination of \(k\) heads in \(n\) coins. There are \(k\) choices for which of its positions received the first head, then \(k-1\) choices for which of the remaining positions received the second head, and so on. Each combination therefore appears in $$k(k-1)\cdots 1 = k!$$ different orders.

Since each combination of \(k\) heads in \(n\) coins has been counted \(k!\) times, the number of ordered placements must be divided by \(k!\) to obtain the number of combinations, i.e. $$\frac{n(n-1)\cdots(n-k+1)}{k!} = \frac{n!}{k!(n-k)!} = C^n_k.$$
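The counting argument above can be checked numerically. The following is a minimal Python sketch, not part of the original derivation: the helper name `count_by_division` and the values of `n` and `k` are chosen here for illustration. It compares the "ordered placements divided by \(k!\)" count with the standard library's `math.comb` and with a brute-force enumeration.

```python
from itertools import combinations
from math import comb, factorial


def count_by_division(n: int, k: int) -> int:
    """Count ordered placements n(n-1)...(n-k+1), then divide by k!."""
    ordered = 1
    for i in range(k):
        ordered *= n - i  # n choices, then n-1, ..., down to n-k+1
    return ordered // factorial(k)  # each combination was counted k! times


# Illustrative values (an assumption, not from the text above).
n, k = 5, 2

# Brute force: enumerate every set of k head positions among n coins.
brute_force = sum(1 for _ in combinations(range(n), k))

print(count_by_division(n, k), comb(n, k), brute_force)  # prints: 10 10 10
```

All three counts agree, which is what the division by \(k!\) is meant to guarantee.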

Poisson Distribution

Let us generalize the idea of getting \(x\) heads in \(n\) coin flips to having \(x\) successes in \(n\) trials.

If the probability of a success is \(p\), then a particular sequence of \(n\) trials with \(x\) successes and \((n-x)\) failures has probability \(p^x(1-p)^{n-x}\), and there are \(C^n_x\) such sequences. The probability of obtaining \(x\) successes out of \(n\) trials is therefore $$b(x;n,p) = \frac{n!}{(n-x)!x!}p^x(1-p)^{n-x}.$$

The expected number of successes is \(np\). Define $$\mu \equiv np.$$ When \(n\) is large and \(p\) is small, with \(\mu\) held fixed, the probability can be shown to approach $$\lim_{n\to\infty}b(x;n,p)=\frac{\mu^xe^{-\mu}}{x!}.$$ This is called the Poisson distribution.
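The limit can be illustrated numerically. This is a small Python sketch under stated assumptions: the values \(\mu = 3\) and \(x = 2\) and the function names are picked here for illustration only. It keeps \(\mu = np\) fixed while \(n\) grows and prints the binomial probability next to the Poisson probability.

```python
from math import comb, exp, factorial


def binomial_pmf(x: int, n: int, p: float) -> float:
    """b(x; n, p) = n! / ((n-x)! x!) * p^x * (1-p)^(n-x)."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def poisson_pmf(x: int, mu: float) -> float:
    """mu^x * exp(-mu) / x!"""
    return mu**x * exp(-mu) / factorial(x)


# Illustrative values (assumptions, not from the text above).
mu, x = 3.0, 2

for n in (10, 100, 10_000):
    p = mu / n  # keep mu = n * p fixed while n grows
    print(n, binomial_pmf(x, n, p), poisson_pmf(x, mu))
```

The gap between the two columns shrinks as \(n\) increases, which is the behaviour the limit describes.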

P-value

Think of the billions of particle collisions inside a large hadron collider as \(n\) trials, and each observed particle as a success. We can then calculate the probability that the observed particles are just a fluctuation in the data, as a way to measure how likely it is that the particle found in the collider really is the particle we are looking for.

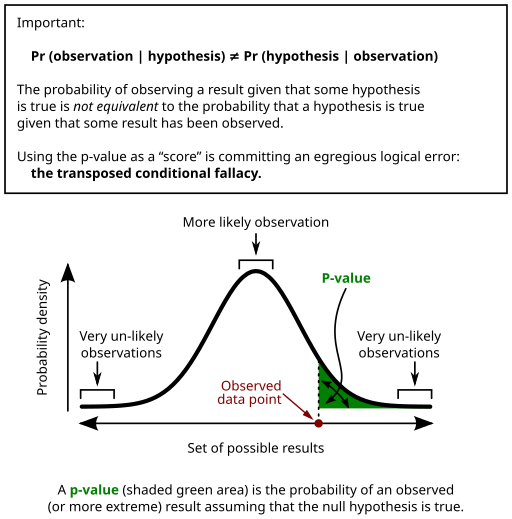

Assuming the null hypothesis is true, i.e. only background events exist, the p-value is the probability of measuring a value at least as large as the observed data point. It is the integral of the probability density from the observed data point to infinity. If the p-value is small, the observed data would be unlikely under the null hypothesis, which counts as evidence against it.

The integral from zero to infinity covers all possible cases, so it equals 1. The cumulative probability of the cases not included in the p-value is therefore \(1 - p\). By the usual convention, the significance is obtained by applying the inverse of the cumulative distribution function (cdf) of the standard normal distribution to this value, $$Z = \Phi^{-1}(1 - p).$$
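As a rough illustration of the conversion between a p-value and a significance, here is a minimal Python sketch. It assumes the cdf being inverted is that of the standard normal distribution, which is the usual one-sided convention in particle physics; the function names `z_from_p` and `p_from_z` are introduced here and are not from the text.

```python
from statistics import NormalDist

# Assumption: significance is measured in standard deviations of a
# standard normal distribution (one-sided convention).
STANDARD_NORMAL = NormalDist(mu=0.0, sigma=1.0)


def z_from_p(p: float) -> float:
    """Significance Z = inverse cdf evaluated at (1 - p); needs 0 < p < 1."""
    return STANDARD_NORMAL.inv_cdf(1.0 - p)


def p_from_z(z: float) -> float:
    """One-sided p-value: the probability in the tail above Z."""
    return 1.0 - STANDARD_NORMAL.cdf(z)


for z in (3.0, 5.0):
    p = p_from_z(z)
    print(f"Z = {z}: p = {p:.3g}, round trip Z = {z_from_p(p):.3f}")
```

With this convention, a significance of 5 corresponds to a one-sided p-value of about \(2.9 \times 10^{-7}\). Note that computing \(1 - p\) in floating point loses precision for extremely small p-values, so this sketch is only meant for moderate significances.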

Expected Significance

\(\mu\) = the expected value over many repeated measurements, taken to be the number of background events calculated using the Standard Model (number of events in blue area + purple area)

\(x\) = number of successes, that is, the number of events we expect to measure (number of events in blue area + purple area + red area)

The p-value is the probability of measuring at least \(x\). It is obtained by summing the probabilities given by the Poisson distribution from \(x\) to infinity.

Applying the inverse of the standard normal cdf to \(1 - p\) gives the expected significance.

Observed Significance

\(\mu\) = the expected value over many repeated measurements, taken to be the number of background events calculated using the Standard Model (number of events in blue area + purple area)

\(x\) = number of successes (the data point value)

The p-value is the probability of measuring at least \(x\). It is obtained by summing the probabilities given by the Poisson distribution from \(x\) to infinity.

Applying the inverse of the standard normal cdf to \(1 - p\) gives the observed significance.
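The two recipes differ only in the value used for \(x\). The following Python sketch puts them side by side. All event counts are hypothetical numbers made up for illustration, the function names are introduced here, and the significance is assumed to be the inverse standard normal cdf evaluated at \(1 - p\).

```python
from math import exp
from statistics import NormalDist


def poisson_tail(x: int, mu: float) -> float:
    """P(X >= x) for X ~ Poisson(mu), computed as 1 - sum_{k < x} P(X = k)."""
    term = exp(-mu)  # P(X = 0)
    below = 0.0
    for k in range(x):
        below += term
        term *= mu / (k + 1)  # P(X = k + 1) from P(X = k)
    return 1.0 - below


def z_score(p: float) -> float:
    """Assumed convention: inverse standard normal cdf at (1 - p)."""
    return NormalDist().inv_cdf(1.0 - p)


# Hypothetical counts, for illustration only.
background = 100.0    # mu: blue + purple areas
expected_count = 130  # x for expected significance: blue + purple + red areas
observed_count = 125  # x for observed significance: the data point value

expected_z = z_score(poisson_tail(expected_count, background))
observed_z = z_score(poisson_tail(observed_count, background))
print(f"expected significance: {expected_z:.2f}")
print(f"observed significance: {observed_z:.2f}")
```

The direct summation is adequate for modest values of \(\mu\) and moderate significances. For very large \(\mu\), `exp(-mu)` underflows, and for very small p-values the subtraction from 1 loses precision, so a dedicated statistics library would be a safer choice in those regimes.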
